Posted on 28 May 2015

We recently started migrating a page with filtering over to elasticsearch. One of the filtering options allows you to search for someone’s name, to make this a nicer experience we added autocompletion for that name. To help with this we used the chewy gem.

Before we get started, this is a prefix autocompletion. So if you search for compl it will suggest complete and not autocomplete.

PersonIndex

class PersonIndex < Chewey::Index

define_type Person do

field :first_name

field :last_name

field :name_autocomplete, {

value: -> {

{

input: [first_name, last_name],

output: full_name,

context: {

group_id: group.id,

},

}

},

type: "completion",

context: {

group_id: {

type: "category",

},

},

}

end

end

First we need to set up the index. With chewy we define PersonIndex and fill in fields we want the index to have.

To create a completion field we give it a special value that contains the input to match against and the output elasticsearch will give after matching. We can also specify a context because we wanted to limit the completion to certain groupings and not suggest everything.

We also define type as completion and let elasticsearch know that this field has a context of group_id.

Suggestions

PersonIndex.suggest({

suggest: {

text: "Eri",

completion: {

field: "name_autocomplete",

context: {

group_id: group.id,

},

},

},

}).suggest

In order to get suggestions out of elasticsearch we use the .suggest method off of the index. The top level key is required and can be anything you want. It will match up with the output key.

Inside the top level key you pass in the text you want to complete against along with what field and any context. Elasticsearch will return JSON similar to below:

Suggestion JSON

{

"_shards": {

"total": 5,

"successful": 5,

"failed": 0

},

"suggest": [

{

"text": "Eri",

"offset": 0,

"length": 3,

"options": [

{

"text": "Eric Oestrich",

"score": 1

}

]

}

]

}

The options array will contain any records that matched against your text. The text inside of options will be the output you specified when creating the value for that record.

If you don’t want to use chewy for suggestions, after setting up the index you can query elasticsearch with the _suggest route off of your index:

GET person/_suggest

{

"suggest": {

"text": "Eri",

"completion": {

"field": "name_autocomplete",

"context": {

"group_id": 1

}

}

}

}

Posted on 11 May 2015

For a while I have wanted to start learning C, but I have always been daunted by compiling a project that is larger than 1 source file. I started reading 21st Century C and it finally got me past the hurdle of autotools for compiling C projects. These are the scripts I ended up with to start a simple project.

.

├── AUTHORS

├── autogen.sh

├── ChangeLog

├── configure.ac

├── COPYING

├── INSTALL

├── Makefile.am

├── NEWS

├── README

└── src

├── main.c

└── Makefile.am

AUTHORS, ChangeLog, COPYING, INSTALL, NEWS, and README are required by autotools before it will generate the configure script. Right now I have them as empty files to move along.

autogen.sh

#!/bin/sh

# Run this to generate all the initial makefiles, etc.

set -e

autoreconf -i -f

I run this script after checking out the project on a new machine. Autotools will spit out all of the required files to let it compile. Your project folder will have a lot of new files that can be ignored.

# -*- Autoconf -*-

# Process this file with autoconf to produce a configure script.

AC_PREREQ([2.69])

AC_INIT([prog], [1], [eric@oestrich.org])

AM_INIT_AUTOMAKE

AC_CONFIG_SRCDIR([src/main.c])

AC_CONFIG_HEADERS([src/config.h])

# Checks for programs.

AC_PROG_CC

AC_PROG_CC_C99

# Checks for libraries.

# Checks for header files.

# Checks for typedefs, structures, and compiler characteristics.

# Checks for library functions.

AC_CONFIG_FILES([

Makefile

src/Makefile

])

AC_OUTPUT

This file generates the configure script. It expands from macros into the bash script. The best way I found to expand this file is looking at other projects and see what they use. For instance, checking to see if glib-2.0 is available and can be compiled against:

AC_CHECK_LIB([glib-2.0], [g_free])

Makefile.am

This sets up automake to look in the src subfolder. Most of the work will happen in there.

src/Makefile.am

bin_PROGRAMS = prog

prog_CFLAGS = # `pkg-config --cflags glib-2.0`

prog_LDFLAGS = # `pkg-config --libs glib-2.0`

prog_SOURCES = main.c # list out all source files

bin_PROGRAMS tells automake what binary files will be generated. prog_CFLAGS and prog_LDFLAGS sets up the CFLAGS and LDFLAGS appropriately. This is where you will add -I and -l flags. prog_SOURCES are what sources gets compiled into prog. The last three lines are named after the binary file that is generated.

All together

Once these files are in place you will be able to clone the repo, and within a few simple commands be compiling your project. Below is the full set of commands it takes.

$ git clone $GIT_REPO git_repo

$ cd git_repo

$ ./autogen.sh

$ ./configure

$ make

$ src/prog

With this set up I have been able to get past the big hurdle of compiling multiple C source files, and start learning C itself. I hope this helps others get over the hurdle as well.

Posted on 30 Apr 2015

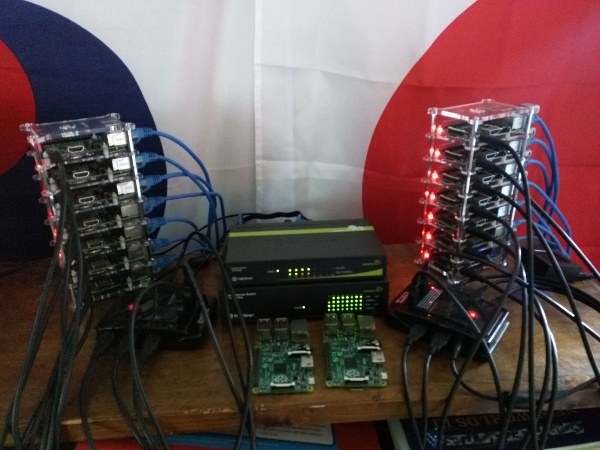

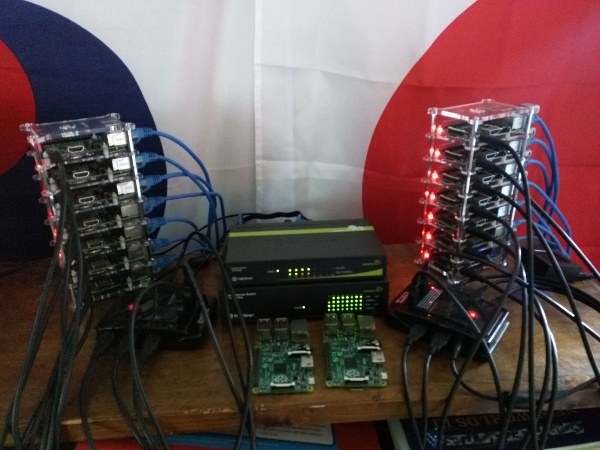

So far I’ve shown off the nitty gritty of my bramble. In this post I want to give an overview of it.

All of the pis live on a separate 192.168.x.x than the rest of my network. This allows me to easily identify them. The entire network is under a CIDR /16 subnet so everything can talk to everything else.

The case I picked for the pi lets me stack to 7 comfortably. 7 also happens to be the number of ports on the USB hub. The ethernet switch, which has 16 ports, is also set up nicely with 7. You can fill the switch and still have room for connecting to other switches for chaining.

Hosts

I have a few types of hosts: build, redis/memchaced, postgres, file server, and docker host. All of the hosts are labeled. If I didn’t label them they would be instantly lost if I tried to find a single one.

Build server

This server creates the docker images that are hosted in the registry also located here. Every docker host pulls from its registry. More information about the build server is located in the Git Push with Docker post.

Redis/Memcached

I originally had these as separate servers, but I had so much free memory that it made sense to shove them together and get another docker host out of it.

Postgres

This server has postgres installed. I have it listening on IP and only allowing the Raspberry Pi subnet to talk to it. Backups are manual at the moment, and given the ability of the Raspberry Pi to corrupt the memory card it’s top of my mind to fix the situation.

File Server

These servers (4) are running gluster. They are set up to host volumes with a redundency of 2. I have tested this failure rate when one of the raspberry pis got a corrupted file system. Upon restoring a fresh install of linux I corrupted the gluster cluster almost to the point of total loss.

Docker

There are 7 of these right now. The last 2 are empty and test beds for updating Arch. They simply have docker installed and will pull down images and run them. More information about these are located in these posts: Docker Raspberry Pi Images, nginx Docker Container, and Git Push with Docker.

Improvements

There are a few improvements I’d like to do with the bramble in a more general sense. Cable management is one of my top concerns. All of the cables I have are about 3ft long, which I had bought before the cases and new what the final setup looks like. Getting shorter cables will help make the bramble look nicer than it currently does.

I’d also like to set up a few more services to go with postgres, redis, and memcached. I think it would be cool to eventually set up elasticsearch. I have a project that currently uses regular postgres text search, but having set up elasticsearch once it would be nice to have a side project that used it.

General logging is also something I want. Right now logs are very hard to get to. There is no heroku logs --tail. I want to set up something like graylog2 or if I get elasticsearch up logstash. Most of the problem with getting these going is the current docker images are built for x86 not ARM. I just haven’t had time to look into this yet.

Posted on 17 Apr 2015

I recently got to use the new FusedLocationApi from Google Play Services on Android. This is something I have tried in the past, but all of the Android documentation refers to the deprecated LocationClient. I wanted to figure out how to use the new API so I did not need to update this project again.

public class MainActivity extends Activity implements

GoogleApiClient.ConnectionCallbacks,

GoogleApiClient.OnConnectionFailedListener {

private GoogleApiClient mGoogleApiClient;

Button mLocationUpdates;

@Override

public void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

mGoogleApiClient = new GoogleApiClient.Builder(this)

.addApi(LocationServices.API)

.addConnectionCallbacks(this)

.addOnConnectionFailedListener(this)

.build();

mLocationUpdates = (Button) findViewById(R.id.location_updates);

// Disable until connected to GoogleApiClient

mLocationUpdates.setEnabled(false);

}

@Override

public void onConnected(Bundle bundle) {

mLocationUpdates.setEnabled(true);

mLocationUpdates.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View v) {

locationRequest();

}

});

}

private void locationRequest() {

LocationRequest request = new LocationRequest()

.setInterval(1000) // Every 1 seconds

.setExpirationDuration(60 * 1000) // Next 60 seconds

.setPriority(LocationRequest.PRIORITY_HIGH_ACCURACY);

Intent intent = new Intent(this, LocationUpdateService.class);

PendingIntent pendingIntent = PendingIntent

.getService(this, 0, intent, PendingIntent.FLAG_UPDATE_CURRENT);

LocationServices.FusedLocationApi

.requestLocationUpdates(mGoogleApiClient, request, pendingIntent);

}

}

This starts by creating a GoogleApiClient and setting connection callbacks to the activity. Once the GoogleApiClient is connected it calls onConnected and we enable the button that will start tracking location. I used the IntentService of receiving updates for this as it was simpler and worked for what I wanted. We create a LocationRequest whenever the button is pressed and ask for updates every second for 60 seconds.

public class LocationUpdateService extends IntentService {

public LocationUpdateService() {

super("LocationUpdateService");

}

@Override

protected void onHandleIntent(Intent intent) {

Bundle bundle = intent.getExtras();

if (bundle == null) {

return;

}

Location location = bundle.getParcelable("com.google.android.location.LOCATION");

if (location == null) {

return;

}

// Deal with new location

}

}

The LocationUpdateService is very simple and pulls the new updates out of the Intent it gets passed.

Take Aways

I liked doing the IntentService route since we didn’t have to worry about the activity being killed to keep receiving updates to location. It also got around the Android 5.1 “feature” of knocking all alarms to a 60 second interval no matter how small of a frequency you actually requested (to fetch getLastLocation() from the LocationClient as I had previously used.)

Posted on 30 Mar 2015

For my Raspberry Pi bramble, I have a build server that builds all of my docker containers and then deploys them. This is how I have that set up.

Folder Structure

On the build server I have a deploy user that has the following folder structure:

~/apps/

project/

repo/

build/

config/

The repo folder contains a bare git repository that git clients will push to similar to Heroku and Github. The build folder is a clone of the repo with a working copy for docker to use as a build context. Lastly the config folder contains any configuration that will be copied into the docker container as necessary, such as a .env file.

post-receive hook

When a git client pushes to the server a post-receive hook is called. This is the script. The script is located at repo/hooks/post-receive and needs to be executable by the deploy user.

APP_PATH=/home/deploy/apps/project

DOCKER_TAG="registry.example.com/project/web"

REPO_PATH=$APP_PATH/repo

BUILD_PATH=$APP_PATH/build

CONFIG_PATH=$APP_PATH/config

unset GIT_DIR

cd $BUILD_PATH

git fetch

git reset --hard origin/master

cp $CONFIG_PATH/.env $BUILD_PATH/.env

docker build -t $DOCKER_TAG .

docker push $DOCKER_TAG

echo 'Restarting on docker-host'

# Double quotes are necessary for the next line to interpolate

# the $DOCKER_TAG variable

ssh deploy@docker-host.example.com "docker pull $DOCKER_TAG"

ssh deploy@docker-host.example.com "sudo systemctl restart project"

The first half of the script sets up variables and should be self explanatory. Unsetting GIT_DIR is required because otherwise git will think you are still in the bare repo and complain about not having a working directory. The repository is reset completely to whatever is now master and then build with docker.

The docker tag is important because it needs to be named however you access your docker repository. Mine for instance is at reigstery.example.com.

Once built and pushed to the docker registry, I pull the new docker image on the remote docker host and restart the project via systemd.

Project docker file

For the project docker file see my post on running a rails app in docker for a full explanation. Below is just the project Dockerfile.

FROM oestrich/base-pi-web

MAINTAINER Eric Oestrich <eric@oestrich.org>

RUN mkdir -p /apps/project

ADD Gemfile* /apps/project/

WORKDIR /apps/project

RUN bundle -j4 --without development test

ADD . /apps/project

RUN . /apps/project/.env && bundle exec rake assets:precompile

RUN . /apps/project/.env && bundle exec rake db:migrate

ENTRYPOINT ["foreman", "start"]

The Gemfile is added first to allow docker to skip reinstalling gems if it never changes. This is incredibly useful as it takes a very long time to build on the Raspberry Pi.

Asset precompilation comes next along with the database migration. I include a database migration each build because I don’t have a nice way to access the database server with a production rails app right now.

The entrypoint is set to foreman start and the app will be launched with a CMD of web or worker.

systemd service file

This is my systemd service that is installed on the docker hosts to keep the rails app alive.

[Unit]

Description=Project Web

Requires=docker.service

After=docker.service

[Service]

ExecStart=/usr/bin/docker run --rm -p 5000:5000 --name project-web registry.example.com:5000/project/web web

Restart=always

[Install]

WantedBy=multi-user.target

This file is very simple and keeps the docker container alive no matter what.

Future improvements

While this works very well for what I want (a small cluster in my house), there are a number of improvements I’d like to eventually get to. I’d really like to be able to use CoreOS and all of the cool tools it comes with. I can’t at the moment because CoreOS does not support the ARM platform.

Some of the neat tools include etcd and fleet to allow me to more easily deploy containers to the bramble without having to know exactly what host it will be on like above. This is the biggest improvement I would like to get.

I’d also like to be able to cache gem building for rails apps. Building nokogiri on a Raspberry Pi takes about half an hour. Since I have no caching in place, any time the Gemfile changes it requires a complete rebuild of all gems. I think I can fix this by using glusterfs (which I already have in place for uploads.) This might be a little slow, but I’m hoping it will still be faster than a complete rebuild. There is a similar problem with asset compilation.

In future blog posts I hope to address these problems with what I have found.